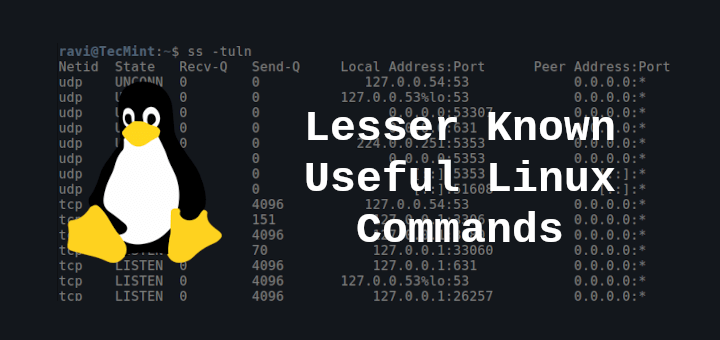

Adapting to the command line or terminal can be challenging for beginners learning Linux. As the terminal provides more control over a Linux system compared to GUI programs, one needs to become accustomed to executing commands in the terminal.

Therefore, to memorize various commands in Linux, it is essential to use the terminal regularly. This practice allows users to understand how commands function with different options and arguments, facilitating a more effective learning experience.

Please review our previous articles in this Linux learning series.

In this article, we will explore tips and tricks for using 10 commands to work with files and manage time in the terminal.

File Types in Linux

In Linux, everything is treated as a file: devices, directories, and regular files are all represented as files.

There are different types of files in a Linux system:

- Regular files may include commands, documents, music files, movies, images, archives, and so on.

- Device files are used by the system to access your hardware components.

There are two types of device files: block files, which represent storage devices such as hard disks and read data in blocks, and character files, which read data one character at a time.

- Hard links and soft links (symlinks): they let you access a file from other locations on a Linux filesystem.

- Named pipes and sockets: allow different processes to communicate with each other.

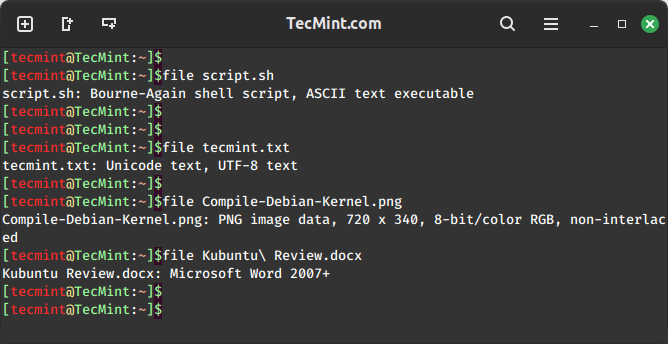

1. Find the Type of File in Linux

You can determine the type of a file by using the file command as follows. The screenshot below shows different examples of using the file command to determine the types of different files.

file filename

2. Find File Type Using ‘ls’ and ‘dir’ Commands

Another way of determining the type of a file is by performing a long listing using the ls and dir commands.

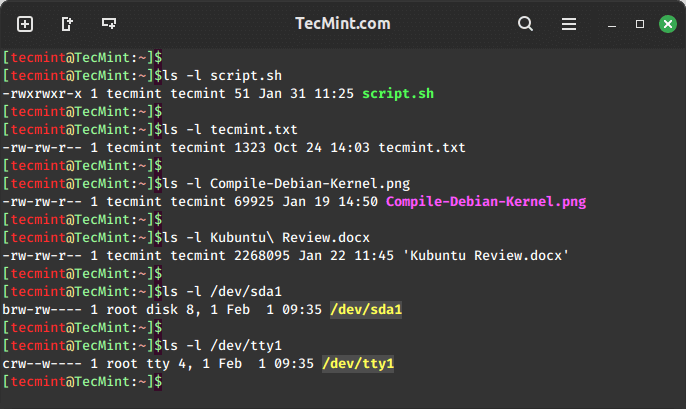

ls Command

Use ls -l to determine the type of a regular file, a block file, or a character file. In the output, the first character of the permissions string shows the file type, and the remaining characters show the file permissions.

ls -l filename

ls -l /dev/sda1

ls -l /dev/tty1
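As a minimal sketch of reading that first character, the snippet below builds a scratch directory and prints the type character of each entry. The names `notes.txt`, `projects`, and `notes-link` are made up for illustration, and the snippet assumes `mktemp` and `cut` are available.

```shell
# Create a throwaway directory with one entry of each common type.
demo=$(mktemp -d)
touch "$demo/notes.txt"                       # regular file
mkdir "$demo/projects"                        # directory
ln -s "$demo/notes.txt" "$demo/notes-link"    # symbolic link

# ls -ld lists the entry itself; cut keeps only the first character.
file_type=$(ls -ld "$demo/notes.txt" | cut -c1)
dir_type=$(ls -ld "$demo/projects" | cut -c1)
link_type=$(ls -ld "$demo/notes-link" | cut -c1)

echo "notes.txt:  $file_type"
echo "projects:   $dir_type"
echo "notes-link: $link_type"

# Clean up the scratch directory.
rm -r "$demo"
```

The three characters printed should be -, d, and l, the same symbols used for counting files later in this article.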

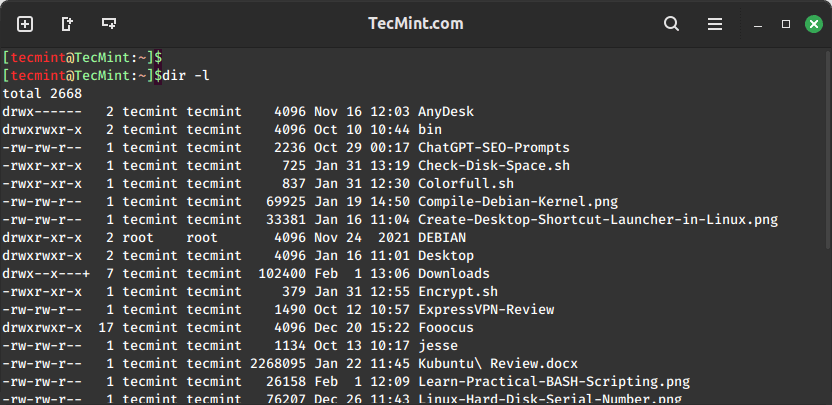

dir Command

Use dir -l to determine the type of a file in the same way.

dir -l

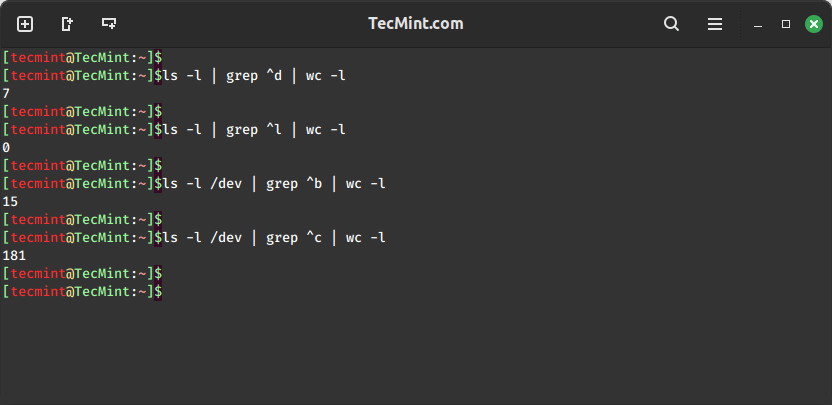

3. Count the Number of Files in a Directory

Next, we shall look at tips on counting the number of files of a specific type in a given directory using the ls, grep, and wc commands. The commands communicate through pipes (the | character), which pass the output of one command to the input of the next.

- grep – command to search according to a given pattern or regular expression.

- wc – command to count lines, words, and characters.

Count the Number of Regular Files

In Linux, regular files are represented by the - symbol.

ls -l | grep ^- | wc -l

Counting the Number of Directories

In Linux, directories are represented by the d symbol.

ls -l | grep ^d | wc -l

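Counting the Number of Symbolic Links

In Linux, symbolic links are represented by the l symbol. Hard links have no symbol of their own: a hard link is listed with the type of the file it names, such as - for a regular file.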

ls -l | grep ^l | wc -l
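To check the counting pipelines against a known answer, the sketch below creates a scratch directory with a fixed mix of entries and runs the same three pipelines on it. The file and directory names are hypothetical, and the patterns are quoted here only as a precaution against shell expansion.

```shell
# Build a scratch directory with a known mix of entries.
demo=$(mktemp -d)
touch "$demo/a.txt" "$demo/b.txt"     # 2 regular files
ln "$demo/a.txt" "$demo/a-hard"       # hard link: listed as a regular file
mkdir "$demo/docs" "$demo/src"        # 2 directories
ln -s a.txt "$demo/a-soft"            # 1 symbolic link

# Each pipe (|) feeds one command's output into the next command's input.
regular=$(ls -l "$demo" | grep '^-' | wc -l)
dirs=$(ls -l "$demo" | grep '^d' | wc -l)
links=$(ls -l "$demo" | grep '^l' | wc -l)

echo "regular=$regular dirs=$dirs links=$links"

# Clean up the scratch directory.
rm -r "$demo"
```

With this layout the counts come out as 3 regular files (the hard link is counted among them), 2 directories, and 1 symbolic link.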

Counting the Number of Block and Character Files

In Linux, block and character files are represented by the b and c symbols respectively.

ls -l /dev | grep ^b | wc -l

ls -l /dev | grep ^c | wc -l

4. Find Files in Linux

Next, we shall look at some commands you can use to find files on a Linux system. These include the locate, find, whatis, and which commands.

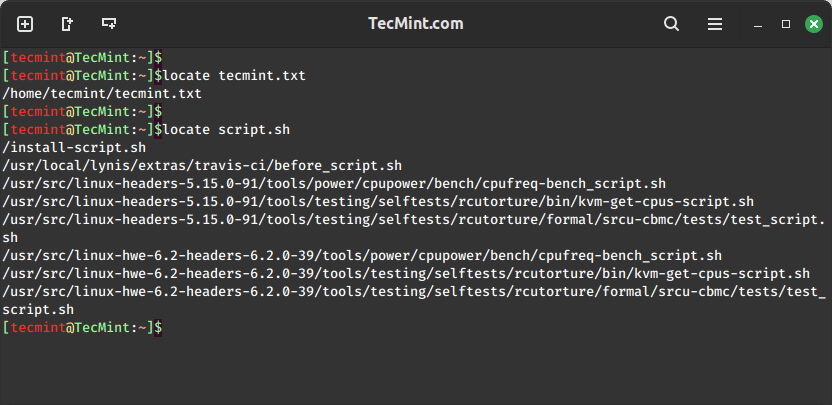

Find Files Using locate Command

The locate command is used to find the location of files and directories on a system by searching a pre-built database.

locate filename

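The locate command is fast and efficient, but it relies on a database that is only updated periodically, so recently created files may not appear in the results. To refresh the database manually, run updatedb.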

sudo updatedb

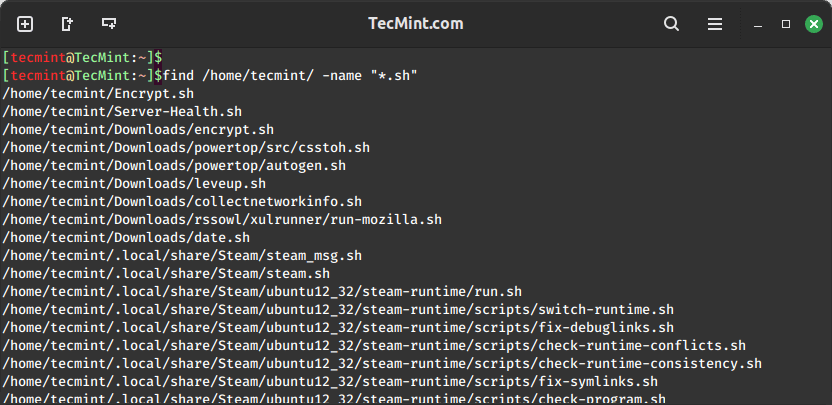

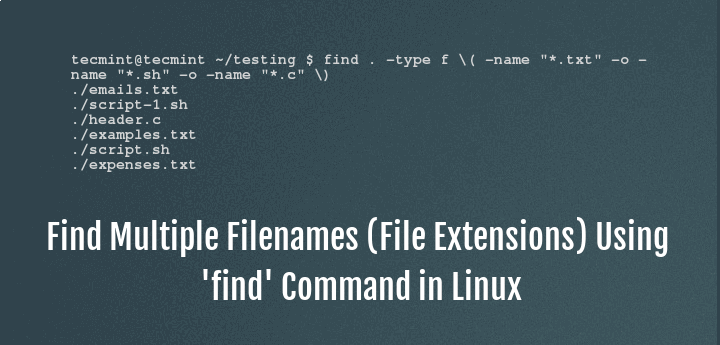

Find Files Using find Command

The find command is used to search for files and directories in a directory hierarchy based on various criteria.

find /home/tecmint/ -name "*.sh"
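The following sketch shows how the -name test behaves on a small scratch tree, and how adding -type f narrows the results to regular files. All file and directory names here are invented for the example.

```shell
# Build a small tree: two scripts, one text file, and a directory named like a script.
demo=$(mktemp -d)
mkdir -p "$demo/scripts/old"
touch "$demo/scripts/backup.sh" "$demo/scripts/old/cleanup.sh" "$demo/readme.txt"
mkdir "$demo/archive.sh"

# -name matches on the entry name; the quotes stop the shell from expanding *.sh first.
by_name=$(find "$demo" -name "*.sh" | wc -l)

# Adding -type f keeps only regular files, so the archive.sh directory is skipped.
files_only=$(find "$demo" -type f -name "*.sh" | wc -l)

echo "by name: $by_name, regular files only: $files_only"

# Clean up the scratch tree.
rm -r "$demo"
```

Here the name-only search matches three entries, while the search restricted to regular files matches two.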

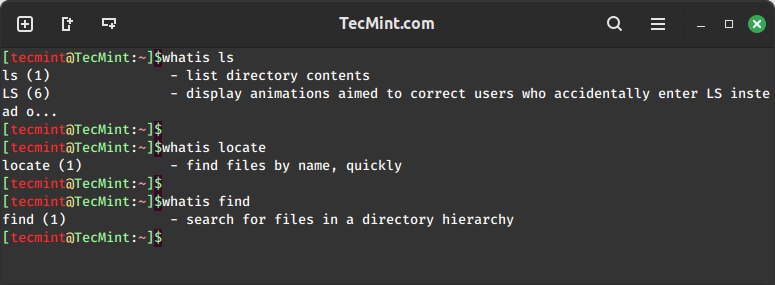

Find Description of Command

The `whatis` command displays a concise, one-line description of a command, taken from its manual page.

whatis ls

whatis locate

whatis find

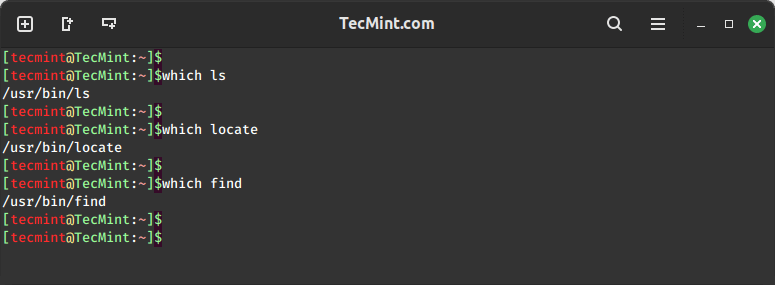

Find the Location of a Command

The which command is used to print the location of the executable file associated with a given command.

which ls

which locate

which find
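On minimal systems the which program may not be installed. As a hedged alternative, the shell's own `command -v` builtin reports where a command would be run from. The sketch below stores that path in a variable (the name `ls_path` is just for illustration) and falls back gracefully if which is absent.

```shell
# command -v is a POSIX shell builtin that reports how a command name resolves.
ls_path=$(command -v ls)
echo "ls resolves to: $ls_path"

# If which is installed, show its answer too; for an external
# command such as ls the two normally agree.
if command -v which >/dev/null 2>&1; then
    which ls
fi
```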

5. Set the Date and Time on Linux

When operating in a networked environment, it is good practice to maintain accurate time on your Linux system. Certain services on Linux systems require the correct time for efficient network operation.

We will explore commands that you can use to manage time on your machine. In Linux, time is managed in two ways: system time and hardware time.

The system time is managed by a system clock and the hardware time is managed by a hardware clock.

date Command

To view your system time, date, and timezone, use the date command as follows.

date

Set your system time using date -s or date --set="STRING" as follows.

sudo date -s "12:27:00"

OR

sudo date --set="12:27:00"

You can also set the date and time together by passing a string in MMDDhhmmYYYY format. The following sets the clock to 12:30 on 9 January 2024.

sudo date 010912302024
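The digits in that argument follow the pattern MMDDhhmmYYYY (month, day, hour, minute, year). As a small sketch, the string can be generated from a readable date without changing the clock. This assumes a date implementation that supports the `-d` option, such as GNU date; the variable name `stamp` is only for illustration.

```shell
# Build the MMDDhhmmYYYY string for 9 January 2024, 12:30.
# This only formats a date; it does not change the system time.
stamp=$(date -d "2024-01-09 12:30" +%m%d%H%M%Y)
echo "$stamp"

# The same string could then be passed to: sudo date "$stamp"
```

Running it should print 010912302024, the same value used in the command above.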

cal Command

View the current date in a calendar using the cal command.

cal

hwclock Command

View hardware clock time using the hwclock command.

sudo hwclock

To set the hardware clock time, use hwclock --set --date="STRING", where STRING is the date and time to set.

The system time is set from the hardware clock during boot, and when the system shuts down, the hardware time is reset to the system time. Therefore, when you view the system time and the hardware time, they are the same unless you have changed the system time. Your hardware time may be incorrect if the CMOS battery is weak.

You can also set your system time using time from the hardware clock as follows.

sudo hwclock --hctosys

It is also possible to set hardware clock time using the system clock time as follows.

sudo hwclock --systohc

To view how long your Linux system has been running, use the uptime command.

uptime

uptime -p

uptime -s

Summary

Understanding file types in Linux is a good practice for beginners, and managing time is crucial, especially on servers, to handle services reliably and efficiently. I hope you find this guide helpful. If you have any additional information, please don’t forget to post a comment.
